Have you ever wanted to supercharge your data modeling with Generative AI but faced restrictions due to your company’s strict data privacy policies?

In this article, we’ll explore a secure and efficient alternative: integrating DeepSeek, an open-source local Large Language Model (LLM), with LM Studio. This combination allows you to automate your data modeling workflow and ensure compliance with data privacy regulations.

Why Choose a Local Environment?

Data security and compliance are critical concerns for businesses. Using a local Generative AI solution ensures that sensitive information never leaves your company’s network, greatly reducing exposure to external threats.

Setting Up LM Studio with DeepSeek

To begin, you’ll need to install LM Studio integrated with DeepSeek in your secure local environment. Follow these simple steps:

- Download LM Studio here.

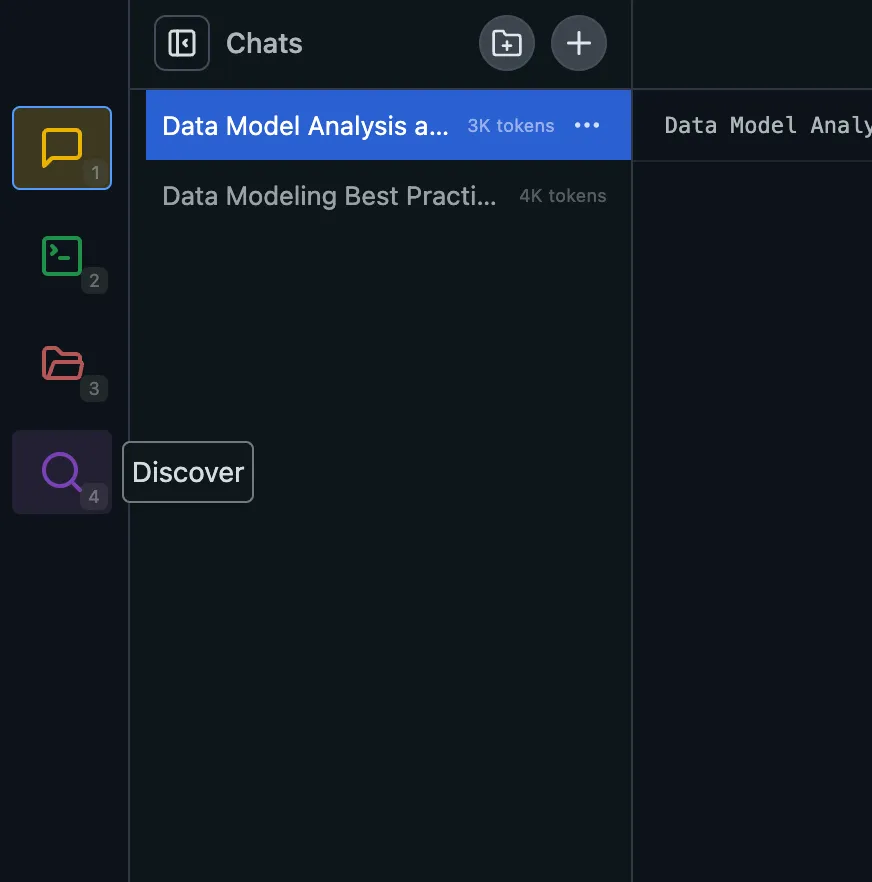

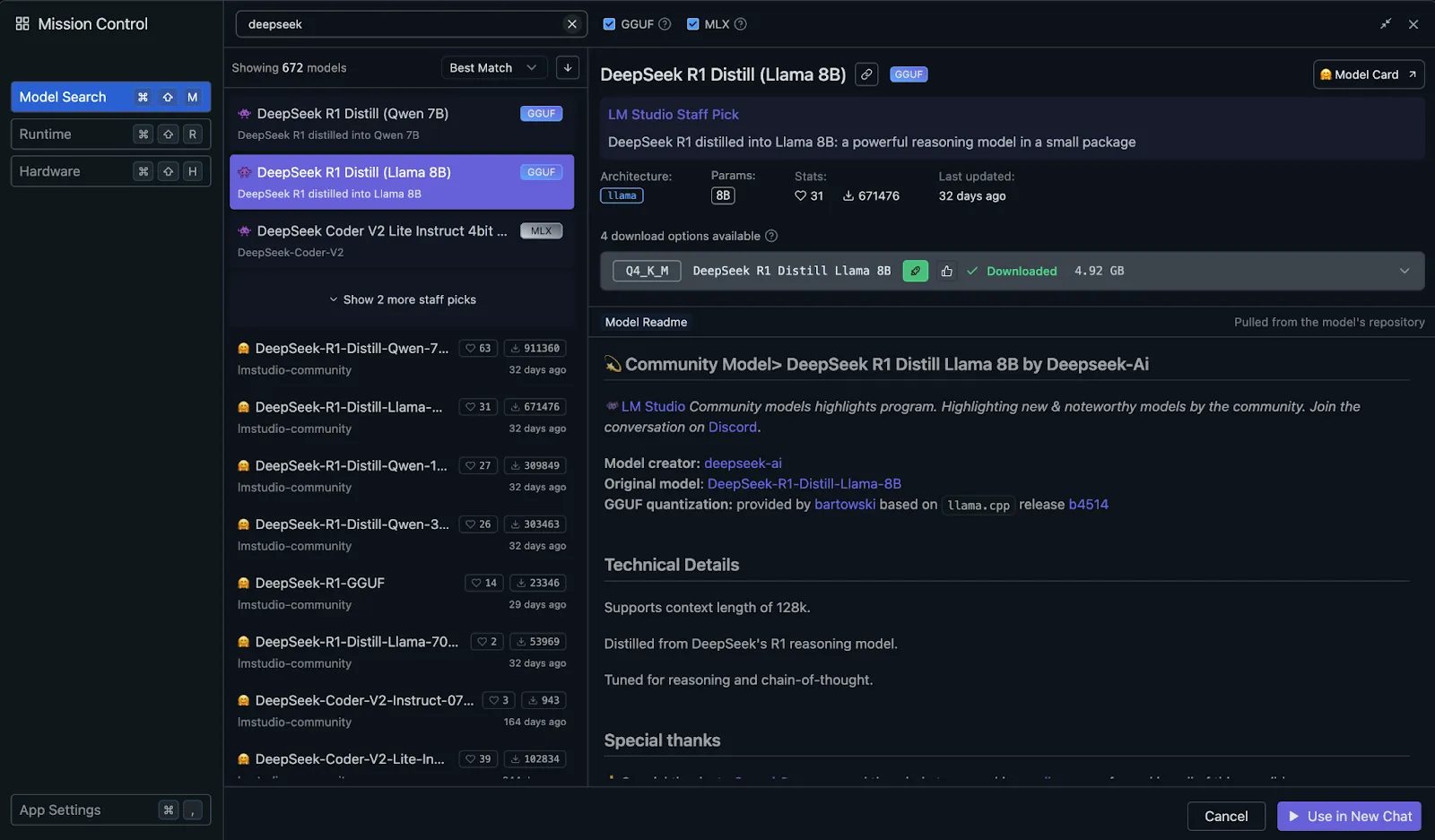

- Upon installation, LM Studio suggests DeepSeek R1 Distilled (Qwen 7B), suitable for low-end systems. However, it’s recommended to use the more robust Llama version 8B instead in the Discover option in LM Studio

Search for DeepSeek model and download ollama run deepseek-r1:8b (requires approximately 4.9GB of memory):

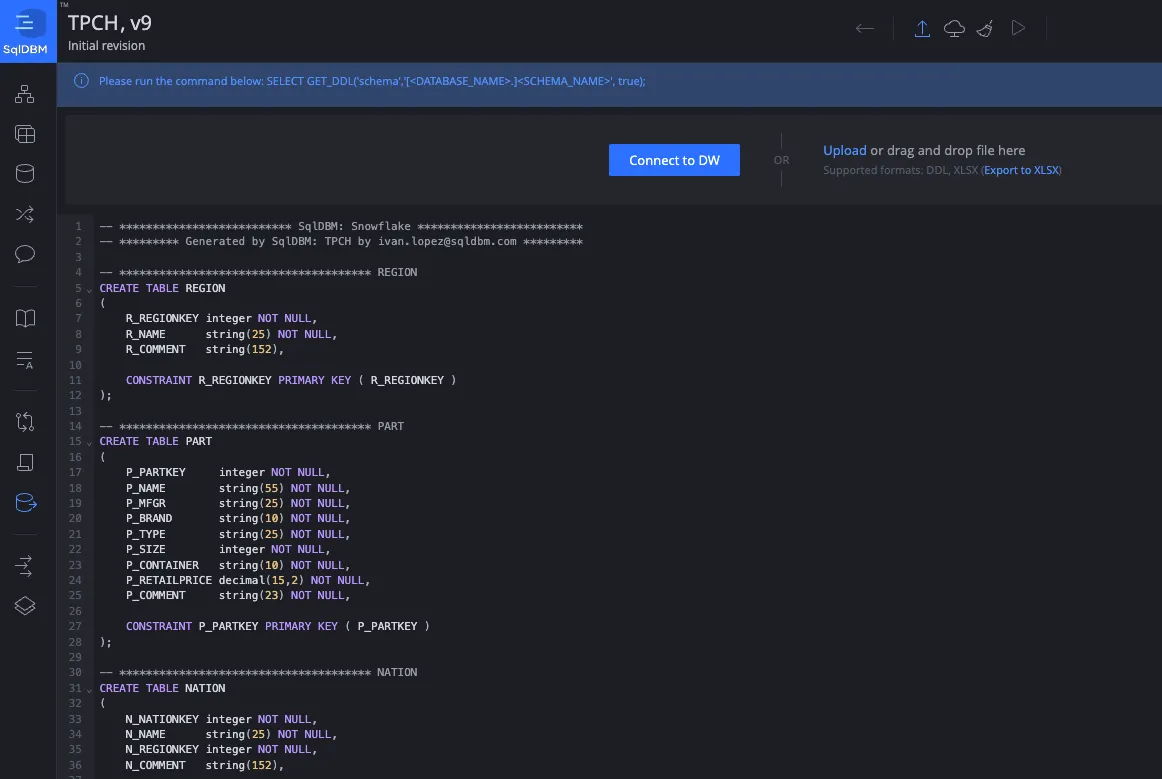

Creating Your Data Model with SqlDBM

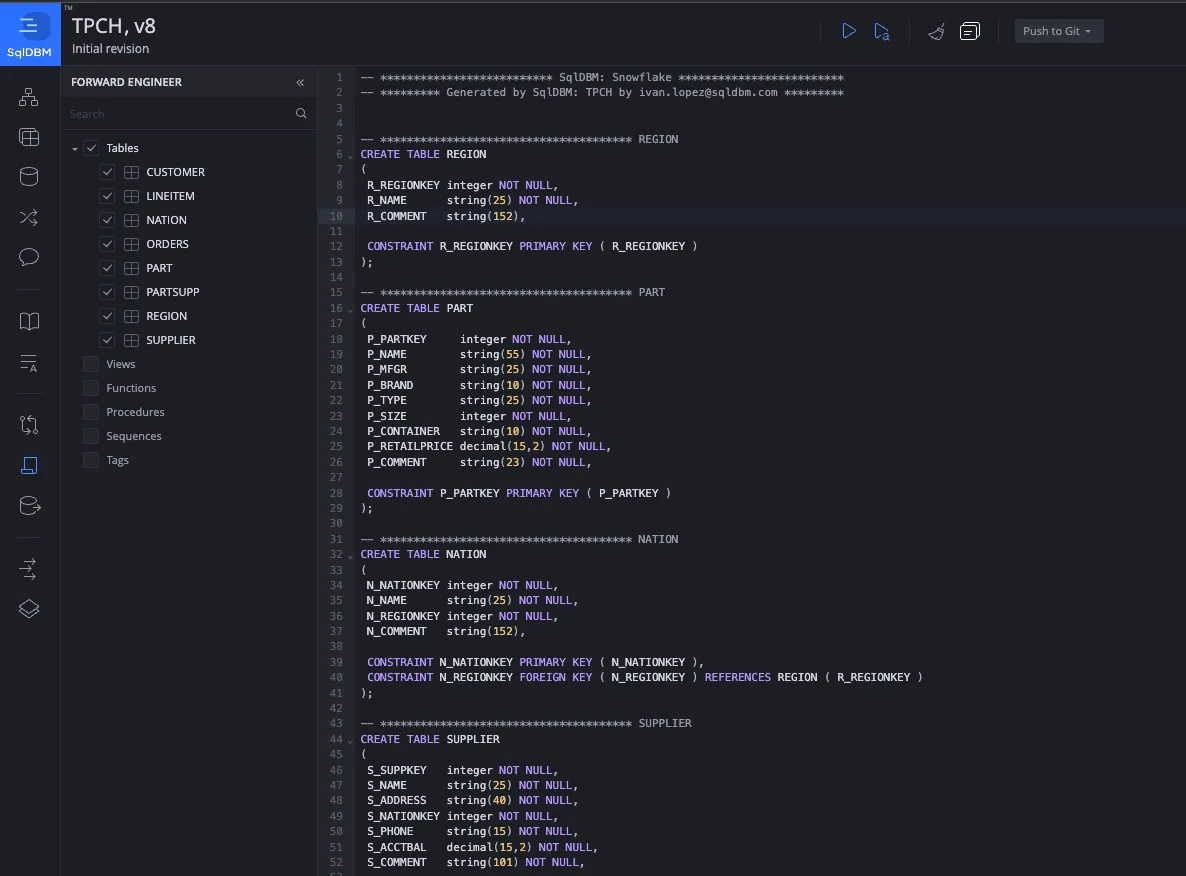

Design your data model using SqlDBM.

For this demonstration, we utilized the TPCH model from Snowflake, a robust industry-standard test case.

Once your model is complete, perform forward engineering to generate the corresponding Data Definition Language(DDL) code. Save this output to a file.

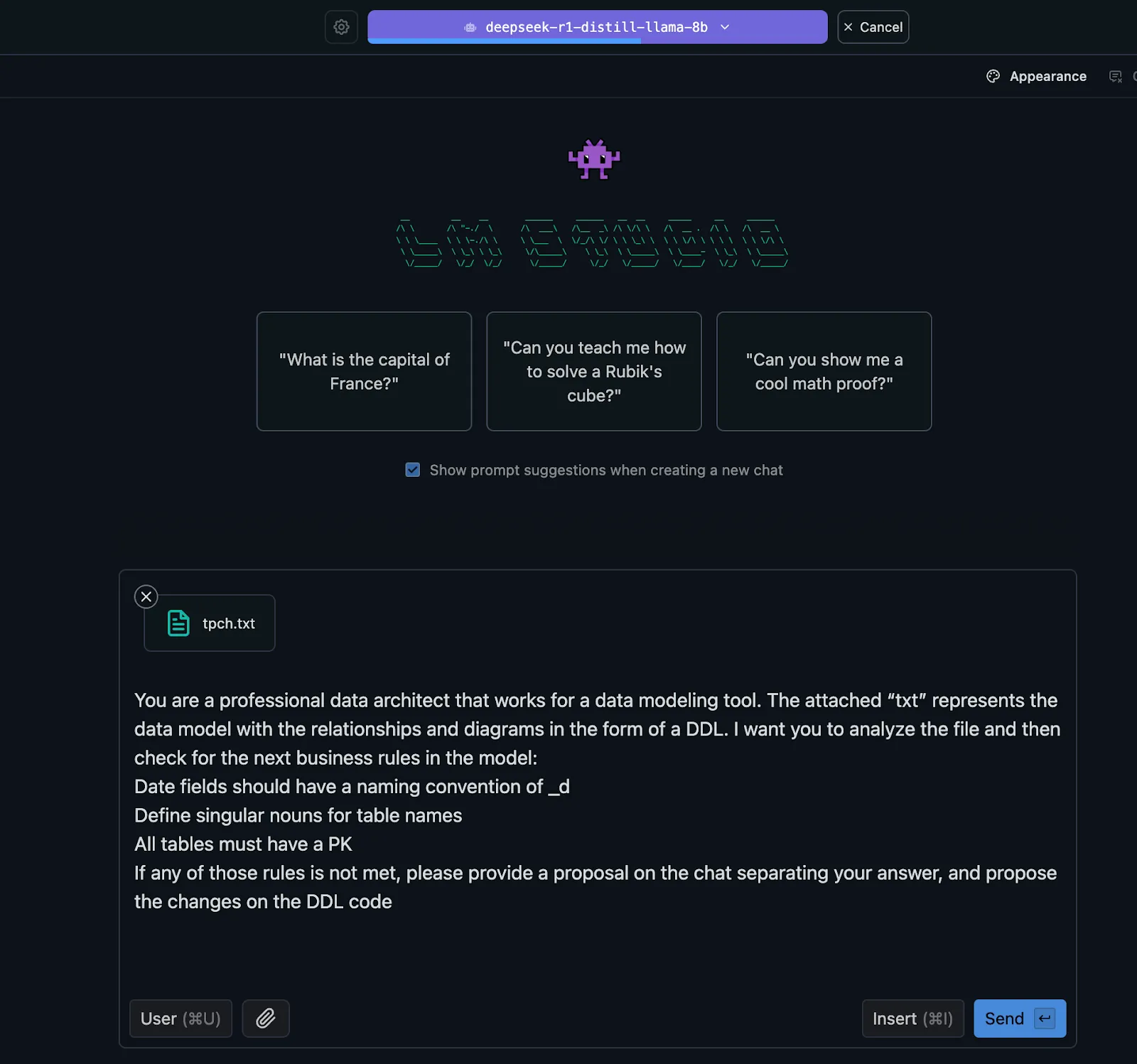

Validating Business Rules with LM Studio

Now comes the exciting part: using your local LLM to validate your data model against key business rules.

Use the following prompt in LM Studio to validate your model against essential business rules and add the previous DDL file:

“You are a professional data architect who works for a data modeling tool. The attached “txt” represents the data model with the relationships and diagrams in the form of a DDL. I want you to analyze the file and then check for the next business rules in the model:

1. Date fields types should have a naming convention of _D

2. Table names are defined as singular nouns

3. All tables must have a PK constraint

If any of these rules is not met, please provide a detailed proposal for all changes that need to be done in the form of a complete DDL”

Example Results

Upon testing, LM Studio identified key issues, such as incorrect naming conventions (O_ORDERDATE, L_SHIPDATE, etc.), plural table names (ORDERS, LINEITEM), and inadequate primary keys (LINEITEM).

The AI promptly generated corrected DDL code, addressing all business rule violations efficiently.

— ************************** SqlDBM: Snowflake *************************

— ********* Generated by SqlDBM: TPCH by ivan.lopez@blog.sqldbm.com *********

— ************************************** REGION

CREATE TABLE REGION

(

R_REGIONKEY integer NOT NULL,

R_NAME string(25) NOT NULL,

R_COMMENT string(152),

CONSTRAINT R_REGIONKEY PRIMARY KEY ( R_REGIONKEY )

);

— ************************************** PART

CREATE TABLE PART

(

P_PARTKEY integer NOT NULL,

P_NAME string(55) NOT NULL,

P_MFGR string(25) NOT NULL,

P_BRAND string(10) NOT NULL,

P_TYPE string(25) NOT NULL,

P_SIZE integer NOT NULL,

P_CONTAINER string(10) NOT NULL,

P_RETAILPRICE decimal(15,2) NOT NULL,

P_COMMENT string(23) NOT NULL,

CONSTRAINT P_PARTKEY PRIMARY KEY ( P_PARTKEY )

);

— ************************************** NATION

CREATE TABLE NATION

(

N_NATIONKEY integer NOT NULL,

N_NAME string(25) NOT NULL,

N_REGIONKEY integer NOT NULL,

N_COMMENT string(152),

CONSTRAINT N_NATIONKEY PRIMARY KEY ( N_NATIONKEY ),

CONSTRAINT N_REGIONKEY FOREIGN KEY ( N_REGIONKEY ) REFERENCES REGION ( R_REGIONKEY )

);

— ************************************** SUPPLIER

CREATE TABLE SUPPLIER

(

S_SUPPKEY integer NOT NULL,

S_NAME string(25) NOT NULL,

S_ADDRESS string(40) NOT NULL,

S_NATIONKEY integer NOT NULL,

S_PHONE string(15) NOT NULL,

S_ACCTBAL decimal(15,2) NOT NULL,

S_COMMENT string(101) NOT NULL,

CONSTRAINT S_SUPPKEY PRIMARY KEY ( S_SUPPKEY ),

CONSTRAINT S_NATIONKEY FOREIGN KEY ( S_NATIONKEY ) REFERENCES NATION ( N_NATIONKEY )

);

— ************************************** CUSTOMER

CREATE TABLE CUSTOMER

(

C_CUSTKEY integer NOT NULL,

C_NAME string(25) NOT NULL,

C_ADDRESS string(40) NOT NULL,

C_NATIONKEY integer NOT NULL,

C_PHONE string(15) NOT NULL,

C_ACCTBAL decimal(15,2) NOT NULL,

C_MKTSEGMENT string(10) NOT NULL,

C_COMMENT string(117) NOT NULL,

CONSTRAINT C_CUSTKEY PRIMARY KEY ( C_CUSTKEY ),

CONSTRAINT C_NATIONKEY FOREIGN KEY ( C_NATIONKEY ) REFERENCES NATION ( N_NATIONKEY )

);

— ************************************** PARTSUPP

CREATE TABLE PARTSUPP

(

PS_PARTKEY integer NOT NULL,

PS_SUPPKEY integer NOT NULL,

PS_AVAILQTY integer NOT NULL,

PS_SUPPLYCOST decimal(15,2) NOT NULL,

PS_COMMENT string(199) NOT NULL,

CONSTRAINT PK_1 PRIMARY KEY ( PS_PARTKEY, PS_SUPPKEY ),

CONSTRAINT PS_PARTKEY FOREIGN KEY ( PS_PARTKEY ) REFERENCES PART ( P_PARTKEY ),

CONSTRAINT PS_SUPPKEY FOREIGN KEY ( PS_SUPPKEY ) REFERENCES SUPPLIER ( S_SUPPKEY )

);

— ************************************** ORDER

CREATE TABLE “ORDER”

(

O_ORDERKEY integer NOT NULL,

O_CUSTKEY integer NOT NULL,

O_ORDERSTATUS string(1) NOT NULL,

O_TOTALPRICE decimal(15,2) NOT NULL,

O_ORDER_D date NOT NULL, — renamed from O_ORDERDATE to O_ORDER_D for naming convention compliance

O_ORDERPRIORITY string(15) NOT NULL,

O_CLERK string(15) NOT NULL,

O_SHIPPRIORITY integer NOT NULL,

O_COMMENT string(79) NOT NULL,

CONSTRAINT O_ORDERKEY PRIMARY KEY ( O_ORDERKEY ),

CONSTRAINT O_CUSTKEY FOREIGN KEY ( O_CUSTKEY ) REFERENCES CUSTOMER ( C_CUSTKEY )

);

— ************************************** LINE_ITEM

CREATE TABLE LINE_ITEM

(

L_ORDERKEY integer NOT NULL,

L_LINENUMBER integer NOT NULL,

L_QUANTITY decimal(15,2) NOT NULL,

L_EXTENDEDPRICE decimal(15,2) NOT NULL,

L_DISCOUNT decimal(15,2) NOT NULL,

L_TAX decimal(15,2) NOT NULL,

L_RETURNFLAG string(1) NOT NULL,

L_LINESTATUS string(1) NOT NULL,

L_SHIP_D date NOT NULL, — renamed from L_SHIPDATE to L_SHIP_D for naming convention compliance

L_COMMIT_D date NOT NULL, — renamed from L_COMMITDATE to L_COMMIT_D for naming convention compliance

L_RECEIPT_D date NOT NULL, — renamed from L_RECEIPTDATE to L_RECEIPT_D for naming convention compliance

L_SHIPINSTRUCT string(25) NOT NULL,

L_SHIPMODE string(10) NOT NULL,

L_COMMENT string(44) NOT NULL,

PS_PARTKEY integer NOT NULL,

PS_SUPPKEY integer NOT NULL,

CONSTRAINT PK_LINE_ITEM PRIMARY KEY ( L_ORDERKEY, L_LINENUMBER ),

CONSTRAINT L_ORDERKEY FOREIGN KEY ( L_ORDERKEY ) REFERENCES “ORDER” ( O_ORDERKEY ),

CONSTRAINT L_PARTKEY FOREIGN KEY ( PS_PARTKEY, PS_SUPPKEY ) REFERENCES PARTSUPP ( PS_PARTKEY, PS_SUPPKEY )

);

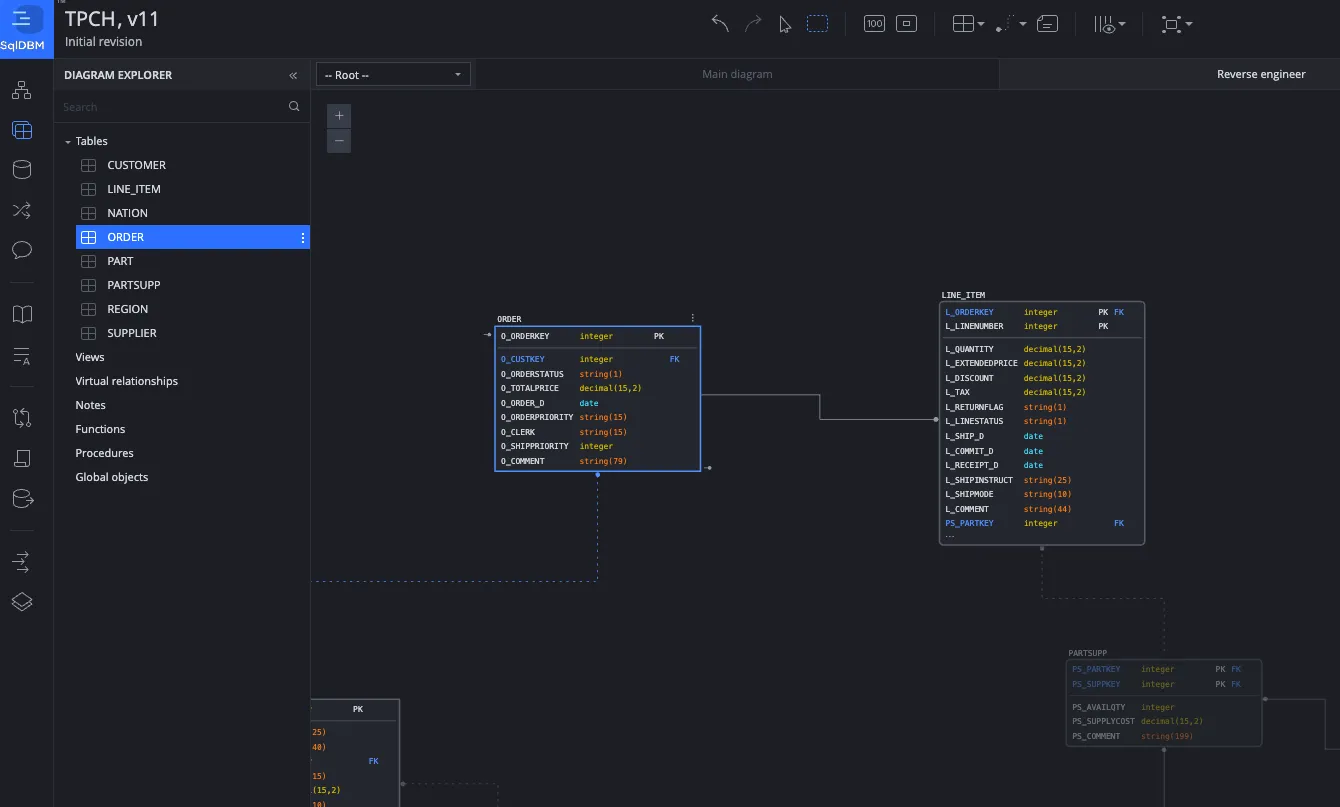

Now, let’s put this DDL back to work in SqlDBM.

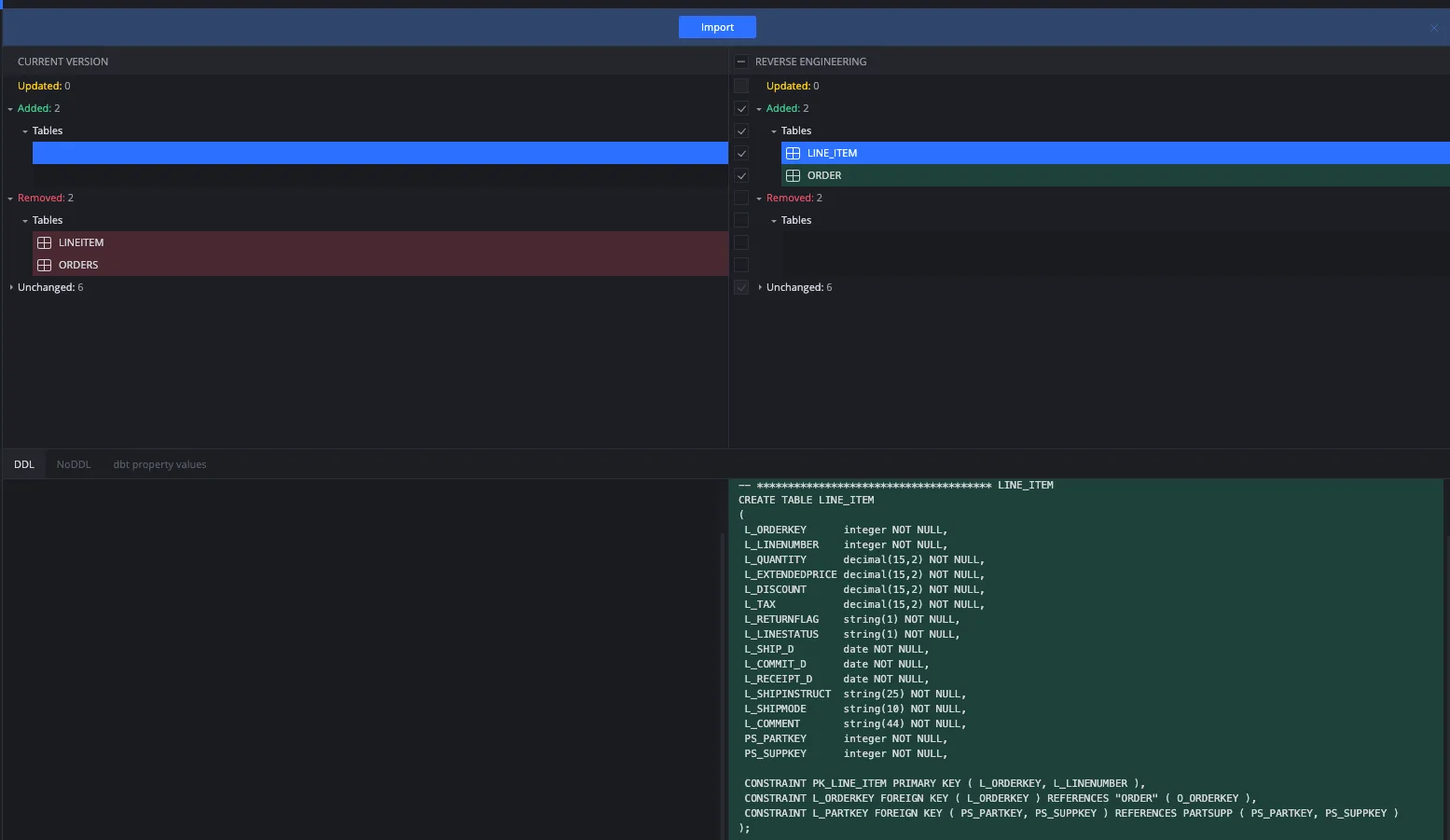

Reverse Engineering and Model Comparison

Next, reverse engineer the AI-corrected DDL back into SqlDBM using its built-in functionalities.

This functionality also allows you to easily compare the original and modified models side by side, ensuring alignment with business requirements.

And voilá, your new model is up to date with your business requirements.

Conclusions

Incorporating Generative AI into your data modeling process doesn’t have to come with a heavy risk to data privacy. By leveraging a local solution like DeepSeek with LM Studio, you can:

- Automate tedious data modeling processes.

- Ensure strict compliance with data privacy.

- Quickly validate and enforce business rules.

- Maintain control over your sensitive data.

This approach empowers data modelers, architects, and engineers to harness Generative AI’s potential without compromising security or compliance.

Thanks for reading!